With the recent release of Llama3, I thought I would revisit the world of Artificial Intelligence (AI). There are a few terms that you need to know when it comes to modern AI implementations. One such term is large language model, or LLM. But before I describe what an LLM is, we should first define what an AI model is. An AI model is a program that has been trained on a set of data to recognize certain patterns or make certain decisions without further human intervention. AI models apply different algorithms to relevant data inputs to achieve the tasks, or produce the output, they've been programmed for.

So what is an LLM? LLMs are a category of foundation models trained on immense amounts of data, which makes them capable of understanding and generating natural language and other types of content to perform a wide range of tasks.

Llama 3 is a freely available, open-weights LLM from Meta that you can run locally on your computer. Once you have downloaded it, there is no need to connect to the web and pay for an LLM service. Llama 3 is one of the best open models available and is competitive with the best proprietary models available today. Did I mention that it was free?

I originally intended to focus on using AI agents for coding, but it turns out to be very interesting to see what you can do with Llama3. I will write another post about AI coding agents later.

I initially downloaded Llama3 from its home site, but I didn't know how to use it. It was like getting crude oil and then needing to process it. Well, that analogy is a bit off the mark, since Llama3 isn't crude but refined. Still, I needed some way to use it, like a gas pump that dispenses the refined product of crude oil. There are a number of ways to use Llama3 locally, and a few that I found were the applications Ollama, LM Studio, and GPT4All. I downloaded all of them and installed them on my Mac Studio. They all looked pretty good, but I decided to go with Ollama. Note that none of them are limited to Llama3; they can also be used with other LLMs like Mistral and Gemma.

Ollama had a number of things going for it. To name a few: it is open source, it has a command line interface (CLI), it has a server that other programs can access, it has two programming libraries (one for Python and one for JavaScript), it has documentation, and you can use it to download and run various models. Ollama is written in the Go programming language (Golang) and is essentially a Go wrapper around Llama.cpp.
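Because Ollama runs a local server, any program that can make HTTP requests can use it, not only the official libraries. Below is a minimal Python sketch using just the standard library. It assumes the server is listening on its default address, `http://localhost:11434`, and that the llama3 model has already been downloaded. It posts a prompt to the `/api/generate` endpoint with streaming turned off, so the whole answer comes back as a single JSON object. The helper names `build_generate_request` and `generate` are my own, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama's server is running locally on its default port.
OLLAMA_HOST = "http://localhost:11434"


def build_generate_request(model: str, prompt: str,
                           host: str = OLLAMA_HOST) -> urllib.request.Request:
    """Build a POST request for the /api/generate endpoint."""
    body = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # one JSON object back instead of a stream of fragments
    }
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, host: str = OLLAMA_HOST) -> str:
    """Send a prompt to the local server and return the model's full answer."""
    with urllib.request.urlopen(build_generate_request(model, prompt, host)) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    return payload["response"]  # with streaming off, the text is in "response"
```

With Ollama running, `generate("llama3", "Why is the sky blue?")` should return the model's answer as a string. As far as I can tell, the official Python library talks to these same endpoints, so this is mostly useful for seeing what actually travels over the wire.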

What is Llama.cpp? Llama.cpp was developed by Georgi Gerganov. It implements Meta's LLaMA architecture in efficient C/C++, and it has one of the most dynamic open-source communities around LLM inference, with more than 390 contributors, 43,000+ stars on the official GitHub repository, and 930+ releases.

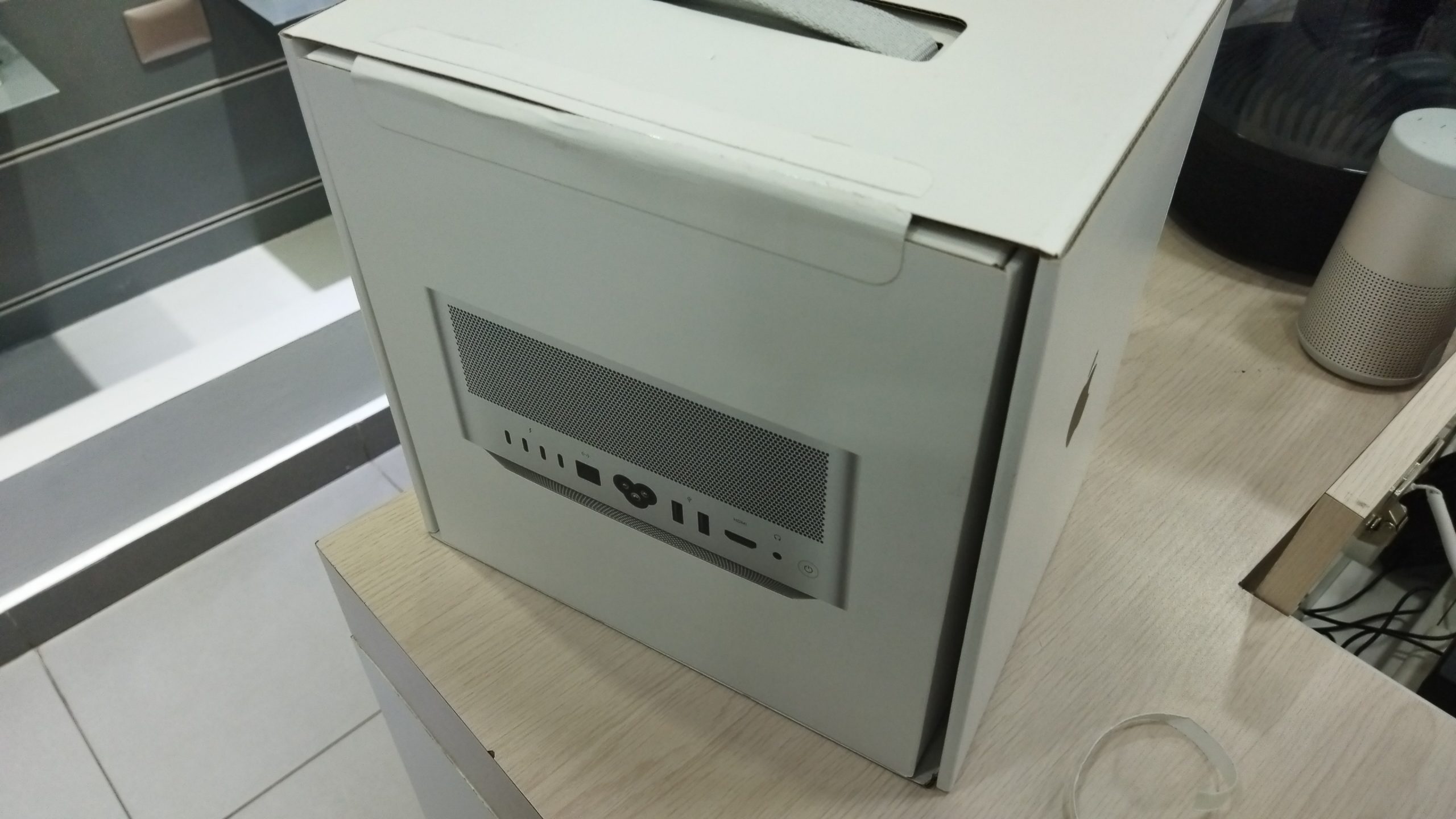

OK, enough background information; let's get back to Ollama. The first thing we have to do is download Ollama. It is available for macOS, Linux, and Windows (preview). I am going to run it on my Mac Studio, so I will get the macOS version (requires macOS 11 Big Sur or later).

Before we run Ollama for the first time, we should look at some of its default configuration. The first thing that comes to mind is where the LLMs that you download with Ollama will be stored. I had downloaded all of the Llama3 models from the Meta website onto my boot drive and soon ran out of space; they are pretty large. I didn't really need all of them, so I deleted them and downloaded just the Llama3 8B model onto another drive. By default on the Mac, Ollama stores its files in a subdirectory of your home directory named .ollama (~/.ollama), and the Ollama models directory is ~/.ollama/models. So by default, all of the models that you download with Ollama end up in that directory, which is, once again, on my boot drive. I need to change that to my other drive, which has a lot more space.
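Since running out of disk space is what started this, it helps to know where models will land and how much room that drive has. This sketch assumes the lookup rule described above: use `OLLAMA_MODELS` if it is set, otherwise `~/.ollama/models`. Keep in mind that it reads the environment of the Python process, which on macOS is not necessarily what the Ollama app sees. The function names are hypothetical.

```python
import os
import shutil
from pathlib import Path
from typing import Mapping, Optional


def resolve_models_dir(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return OLLAMA_MODELS if it is set, otherwise the default ~/.ollama/models."""
    env = os.environ if env is None else env
    override = env.get("OLLAMA_MODELS")
    if override:
        return Path(override).expanduser()
    return Path.home() / ".ollama" / "models"


def free_space_gb(path: Path) -> float:
    """Free space, in decimal GB, on the volume holding `path` or its nearest existing parent."""
    probe = path
    # The models directory may not exist yet, so walk up until we find one that does.
    while not probe.exists() and probe != probe.parent:
        probe = probe.parent
    return shutil.disk_usage(probe).free / 1e9
```

For example, `d = resolve_models_dir(); print(d, f"{free_space_gb(d):.1f} GB free")`. The Llama3 8B download is about 4.7 GB, so the target drive needs comfortably more than that for each model you plan to keep.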

The Ollama FAQ explains how to change Ollama's configuration. Since I am running Ollama on macOS, I need to set the environment variables that the Ollama server uses with the launchctl command. The environment variable that controls where Ollama stores models is called OLLAMA_MODELS, and here is how we change it:

```
launchctl setenv OLLAMA_MODELS /WDrive/AI/ollama_models
```
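Before restarting Ollama, it's worth double-checking the setting. Here is a small sketch, assuming macOS: `launchctl getenv` reads back the value launchd now holds, and a second helper checks that the target folder exists and is writable, which matters when it lives on another drive. As far as I know, values set with `launchctl setenv` are not kept across reboots, so this is also a quick check after restarting the Mac. Both helper names are hypothetical.

```python
import os
import subprocess
from pathlib import Path
from typing import Optional


def launchd_value(name: str) -> Optional[str]:
    """Read back a variable set with `launchctl setenv`; None if unset or not on macOS."""
    try:
        result = subprocess.run(["launchctl", "getenv", name],
                                capture_output=True, text=True, check=False)
    except FileNotFoundError:  # launchctl only exists on macOS
        return None
    value = result.stdout.strip()
    return value or None


def is_usable_models_dir(path: str) -> bool:
    """True if `path` is an existing directory that the current user can write to."""
    p = Path(path).expanduser()
    return p.is_dir() and os.access(p, os.W_OK)
```

For example: `print(launchd_value("OLLAMA_MODELS"), is_usable_models_dir("/WDrive/AI/ollama_models"))`.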

After setting the variables we need, we will restart Ollama. But before I restart my Ollama instance, I need to remove the Llama3 model that is in the default directory. If you haven't downloaded a model yet, you can skip this step. Listing my current models shows that I only have the llama3 model:

```
martin$ ollama list
NAME            ID              SIZE      MODIFIED
llama3:latest   71a106a91016    4.7 GB    41 hours ago
```
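The same list is available from the server. This sketch assumes the default local address and the `/api/tags` endpoint, which returns the installed models as JSON with `name`, `digest`, and `size` (bytes) fields; `format_tags` turns that into tab-separated rows resembling `ollama list`, leaving out the MODIFIED column to keep it short. Two further assumptions: that the ID column is the first 12 hex digits of the digest, and that sizes use 1000-based units. The function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List

OLLAMA_HOST = "http://localhost:11434"  # assumption: default local server address


def human_size(num_bytes: int) -> str:
    """Format a byte count with decimal (1000-based) units, e.g. 4.7 GB."""
    size = float(num_bytes)
    for unit in ("B", "KB", "MB", "GB"):
        if size < 1000:
            return f"{size:.0f} {unit}" if unit == "B" else f"{size:.1f} {unit}"
        size /= 1000
    return f"{size:.1f} TB"


def format_tags(payload: Dict[str, Any]) -> List[str]:
    """Turn an /api/tags response into NAME / ID / SIZE rows like `ollama list`."""
    rows = []
    for model in payload.get("models", []):
        # Assumption: the ID shown by `ollama list` is the digest's first 12 hex digits.
        short_id = model["digest"].split(":")[-1][:12]
        rows.append(f"{model['name']}\t{short_id}\t{human_size(model['size'])}")
    return rows


def list_models(host: str = OLLAMA_HOST) -> List[str]:
    """Fetch the locally installed models from a running Ollama server."""
    with urllib.request.urlopen(f"{host}/api/tags") as resp:
        return format_tags(json.loads(resp.read().decode("utf-8")))
```

With the server running, `print("\n".join(list_models()))` prints one row per installed model.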

If you want to know which commands Ollama accepts, run it with the help command.

```
martin$ ollama help
Large language model runner

Usage:
  ollama [flags]
  ollama [command]

Available Commands:
  serve       Start ollama
  create      Create a model from a Modelfile
  show        Show information for a model
  run         Run a model
  pull        Pull a model from a registry
  push        Push a model to a registry
  list        List models
  cp          Copy a model
  rm          Remove a model
  help        Help about any command

Flags:
  -h, --help      help for ollama
  -v, --version   Show version information

Use "ollama [command] --help" for more information about a command.
```

I am going to remove the model, change the default models directory, and then restart Ollama.

```
martin$ ollama rm llama3
deleted 'llama3'
martin$ ollama list
NAME    ID    SIZE    MODIFIED
martin$ launchctl setenv OLLAMA_MODELS /Drive2/AI/ollama_models
```

Check the Ollama website to see which models are available for download. To download a model, we will use the run command. It first downloads (pulls) the model if it isn't already on your machine and then runs it; the pull command, by contrast, only downloads. Running the model puts us at the Read-Eval-Print Loop (REPL) prompt (>>>), where the model waits for our questions.

```
martin$ ollama run llama3
pulling manifest
pulling 00e1317cbf74… 100% ▕█████████████████████████████████████████████████▏ 4.7 GB
pulling 4fa551d4f938… 100% ▕█████████████████████████████████████████████████▏ 12 KB
pulling 8ab4849b038c… 100% ▕█████████████████████████████████████████████████▏ 254 B
pulling 577073ffcc6c… 100% ▕█████████████████████████████████████████████████▏ 110 B
pulling ad1518640c43… 100% ▕█████████████████████████████████████████████████▏ 483 B
verifying sha256 digest
writing manifest
removing any unused layers
success
```
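The pull fetches the model as several layers (short hex names such as 00e1317cbf74 look like truncated SHA-256 digests) and then reports "verifying sha256 digest". The idea behind that step is simple: hash the downloaded bytes and compare the result with the digest the manifest promised. This is an illustrative sketch of such a check under that assumption, not Ollama's own code; hashing in chunks means a 4.7 GB layer never has to be loaded into memory at once.

```python
import hashlib
from pathlib import Path
from typing import Iterable, Iterator


def read_chunks(path: Path, chunk_size: int = 1 << 20) -> Iterator[bytes]:
    """Yield a file in 1 MiB chunks so multi-GB layers never sit in memory whole."""
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            yield chunk


def sha256_hex(chunks: Iterable[bytes]) -> str:
    """Hash data incrementally; same result as hashing all the bytes at once."""
    h = hashlib.sha256()
    for chunk in chunks:
        h.update(chunk)
    return h.hexdigest()


def verify_digest(actual_hex: str, expected: str) -> bool:
    """Compare against an expected digest written as 'sha256:<hex>' or as bare hex."""
    return actual_hex == expected.split(":")[-1].lower()


def verify_layer(path: Path, expected: str) -> bool:
    """True only if the file's SHA-256 matches the expected digest."""
    return verify_digest(sha256_hex(read_chunks(path)), expected)
```

A call such as `verify_layer(Path("layer.bin"), "sha256:<expected hex>")` (both arguments hypothetical) returns True only if the file arrived intact.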

Once the download finishes, we are at the REPL prompt, and I asked it a classic question:

```
>>> Send a message (/? for help)
>>> Why is the sky blue?
What a great question!

The short answer: The sky appears blue because of a phenomenon called scattering, which is the interaction
between light and tiny particles in the Earth’s atmosphere.

Here’s a more detailed explanation:

1. **Sunlight**: When sunlight enters our atmosphere, it contains all the colors of the visible spectrum
(red, orange, yellow, green, blue, indigo, and violet).
2. **Molecules and particles**: The atmosphere is filled with tiny molecules like nitrogen (N2) and oxygen
(O2), as well as aerosols like water droplets, dust, and pollutants.
3. **Scattering**: When sunlight hits these molecules and particles, it scatters in all directions. This
scattering effect is more pronounced for shorter wavelengths of light, such as blue and violet.
4. **Blue dominance**: As the scattered light reaches our eyes, we perceive the sky as blue because the
blue light has been scattered more than other colors (like red and orange). This is why the sky typically
appears blue during the daytime, when the sun is overhead.

Some additional factors that contribute to the sky’s color:

* **Atmospheric conditions**: The color of the sky can be affected by atmospheric conditions like
pollution, dust, water vapor, and temperature gradients.
* **Clouds**: Clouds can reflect or absorb light, changing the apparent color of the sky. For example,
thick clouds can make the sky appear more gray or white.
* **Time of day**: The color of the sky changes during sunrise and sunset due to the angle of the sun and
the scattering of light by atmospheric particles.

Now you know why the sky is blue!
```
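The answer shows up in the REPL a few words at a time because the model streams its output. Over the API, `/api/generate` streams by default: the reply is newline-delimited JSON, each object carrying a text fragment in `response`, with `done` set to true on the last one. Here is a sketch that reassembles those fragments, assuming the default local address; `iter_tokens` and `ask` are hypothetical names.

```python
import json
import urllib.request
from typing import Iterable, Iterator

OLLAMA_HOST = "http://localhost:11434"  # assumption: default local server address


def iter_tokens(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the text fragments from a streamed (newline-delimited JSON) reply."""
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines between objects
        chunk = json.loads(line)
        if chunk.get("response"):
            yield chunk["response"]
        if chunk.get("done"):
            break  # the final object marks the end of the answer


def ask(model: str, prompt: str, host: str = OLLAMA_HOST) -> str:
    """Print an answer as it streams in, REPL-style, and return the full text."""
    body = json.dumps({"model": model, "prompt": prompt}).encode("utf-8")  # streams by default
    request = urllib.request.Request(f"{host}/api/generate", data=body,
                                     headers={"Content-Type": "application/json"})
    parts = []
    with urllib.request.urlopen(request) as resp:  # iterating the response yields lines
        for token in iter_tokens(resp):
            print(token, end="", flush=True)
            parts.append(token)
    print()
    return "".join(parts)
```

With the server running, `ask("llama3", "Why is the sky blue?")` prints the answer as it arrives, much like the REPL, and returns the complete text.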

Entering /? at the chat prompt lists the commands that the Ollama REPL accepts.

```
>>> /?
Available Commands:
  /set            Set session variables
  /show           Show model information
  /load <model>   Load a session or model
  /save <model>   Save your current session
  /bye            Exit
  /?, /help       Help for a command
  /? shortcuts    Help for keyboard shortcuts

Use """ to begin a multi-line message.
```

In addition to using /bye to exit the Ollama REPL, you can also press Ctrl+D. That's it for now. I will post more about using Ollama and Llama3 later.