I recently said that a firmware update can do wonders. But a firmware (or BIOS) update can cause problems too. 🙁

The BIOS version that I was running on my Gigabyte Z390 M Gaming motherboard was 8, whereas the latest version is 9M. I was having an issue where the new NVMe drive that I had cloned Catalina onto was not recognized as a boot option. The BIOS didn’t think it was a UEFI OS like the old NVMe was. It had to be a UEFI OS since I had cloned it with CCC 5, right? I thought I should get the latest BIOS version and update my motherboard. The BIOS updates come with a utility that allows you to update the BIOS, but you need to be running Windows to use it. My motherboard also has a feature in the BIOS called Q-Flash, which allows you to update the BIOS from inside the BIOS setup itself. No need to use Windows.

The Q-Flash utility allows you to read the new BIOS update from an external disk. I loaded version 9M onto a USB stick and booted into the BIOS. Running Q-Flash was easy enough. You can also save a backup of the current BIOS version. I suspect that is in case your update fails and you can’t start the motherboard with the newly installed update. I selected the update file from a file menu of sorts inside Q-Flash and then ran the update. Q-Flash said that the install was successful. I then rebooted and went back into the BIOS to check. I was now running version 9M and I could see all drives as boot options.

But my new NVMe drive was still not listed as being a UEFI OS. I then exited the BIOS and booted into the OpenCore menu. The current default selection was my new NVMe, so I let the process continue. I never made it into Catalina. An error was displayed and it said:

OCS: No schema for KeyMergeThreshold at 2 index, context <Input>!

OC: Failed to bootstrap IMG4 values – Invalid Parameter

Halting on critical error

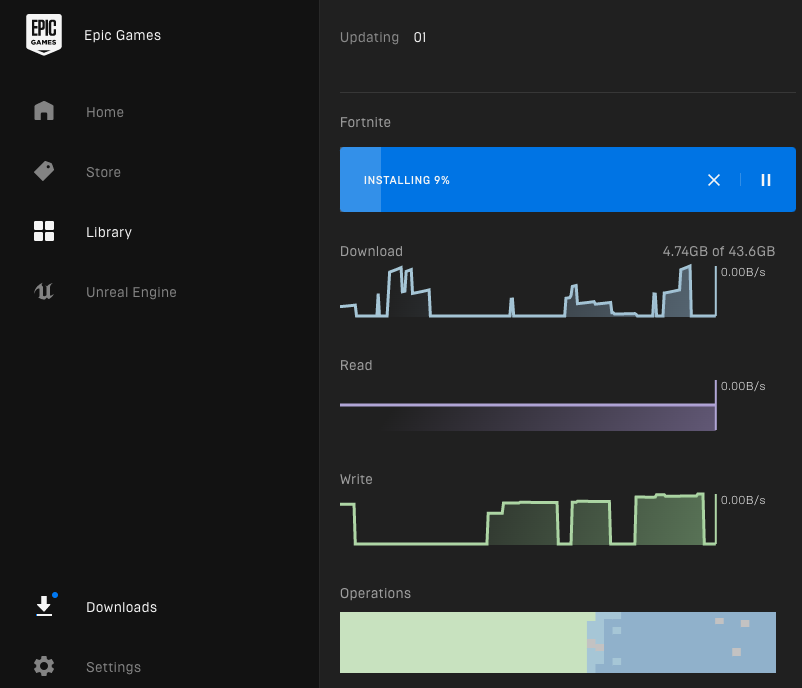

I then tried the old NVMe and it also stalled in the boot process before it ever got to the Apple logo! No error messages that I could see. I then reverted the BIOS to version 8 and tried to boot from each of the NVMe drives, and the same thing happened. I then reinstalled BIOS version 9M, figuring that it wasn’t the BIOS upgrade itself that caused my issue.

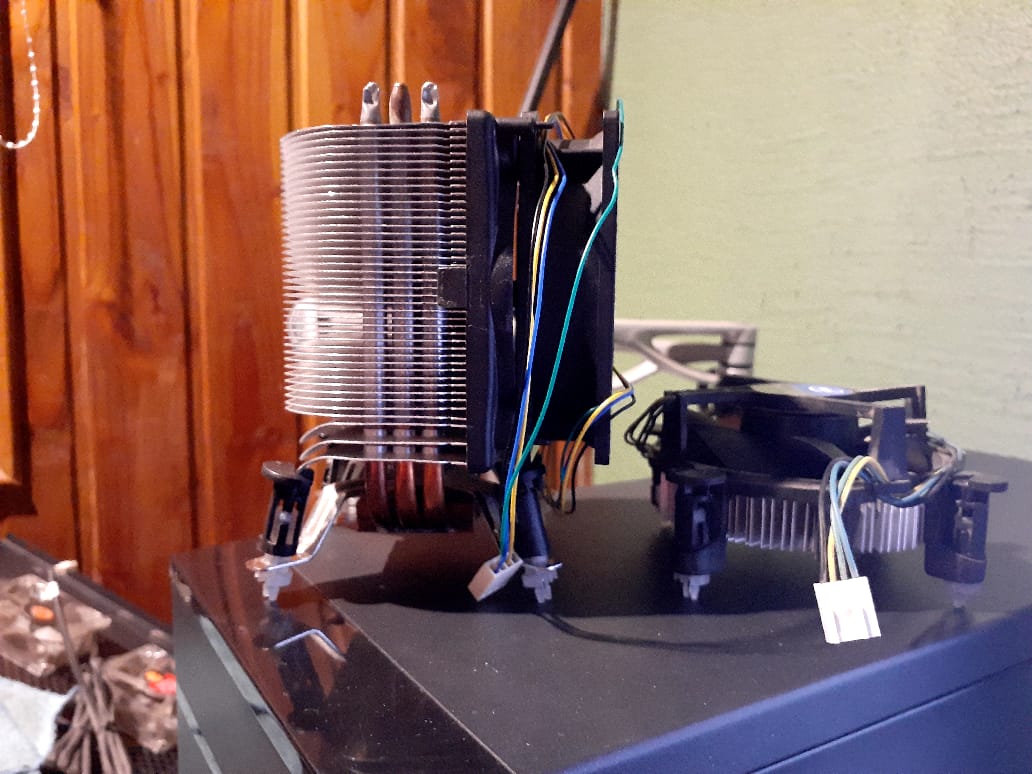

I went to another computer so I could search the internet for a possible solution to my boot issue. As for the No schema error, I was able to find some information. It seems that in OpenCore 0.6.7, KeyMergeThreshold is no longer used and should be removed from the config.plist file. But that still didn’t explain my boot stalling issue. After looking at various things, there was something that helped me figure out what happened. When I first installed Catalina and tried to boot up, it too stalled. It was just like what was happening now. I went back into the BIOS and checked the settings that I had previously had to change. I hadn’t thought about it, but a good number of those settings had changed. With a new BIOS there were new defaults (except Fast Boot was False this time around). And like the last time, once I made those BIOS changes I was able to boot into Catalina again. Using the OpenCore menu I was able to boot into Catalina on all three of the devices that I had it on. The three devices were my two NVMe drives and the old HACK1 boot drive, which I had cloned the old NVMe drive to.

But still there was the question of why the new NVMe drive wasn’t listed as being a UEFI OS like the old one. I figured out that CCC 5 hadn’t made a complete clone of the old NVMe drive. Using the command line, I mounted the EFI volume/partition on each of the NVMe drives. Checking the new NVMe, I saw that its EFI volume/partition was empty. I then copied the files in the EFI volume/partition from the old NVMe to the new NVMe’s EFI volume/partition. I rebooted into the BIOS and, checking the Boot menu, I could see that the new NVMe was now also a UEFI OS like the old NVMe. I don’t know if CCC 5 has trouble with APFS. Or maybe since I was copying from case-sensitive APFS to case-insensitive APFS, there was an issue. Anyway, that problem was solved.
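The check-and-copy step can also be scripted. What follows is a minimal sketch in Python, not the commands I actually ran. It assumes both EFI partitions are already mounted (on macOS, `diskutil mount` with the partition's identifier is the usual way). The function names and the example mount points are hypothetical, and I have only reasoned about this against ordinary folders, not a real FAT-formatted EFI partition.

```python
import shutil
from pathlib import Path


def visible_entries(mount_point):
    """Return the sorted names of non-hidden top-level entries on a mounted volume."""
    return sorted(
        p.name for p in Path(mount_point).iterdir() if not p.name.startswith(".")
    )


def copy_efi_contents(src_mount, dst_mount):
    """Copy every visible top-level entry from one mounted EFI volume to another.

    Returns the list of top-level names that were copied.
    """
    copied = []
    for name in visible_entries(src_mount):
        src = Path(src_mount) / name
        dst = Path(dst_mount) / name
        if src.is_dir():
            # dirs_exist_ok lets the copy merge into an existing folder (Python 3.8+).
            shutil.copytree(src, dst, dirs_exist_ok=True)
        else:
            shutil.copy2(src, dst)
        copied.append(name)
    return copied


# Hypothetical usage; the mount points depend on how macOS names the volumes:
#   if not visible_entries("/Volumes/EFI 1"):
#       copy_efi_contents("/Volumes/EFI", "/Volumes/EFI 1")
```

An empty list from `visible_entries` on the clone's EFI volume is the same symptom described above: the clone has nothing for the firmware to recognize as a UEFI OS.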

Side note: Later on I also did the same thing for the Samsung 850 EVO drive that I had cloned from the old NVMe drive. It too was missing the contents of the EFI volume/partition.

The last thing I needed to do was to get rid of that “No schema for KeyMergeThreshold” error. This called for editing the config.plist file and removing the line with KeyMergeThreshold. You can’t edit the config.plist file directly in the EFI volume/partition, so I made a copy where I could edit the file. I opened up my copy of the config.plist file in Xcode to remove the line. The KeyMergeThreshold line is located in UEFI->Input. I deleted the line and saved the changes. I then deleted the original file and copied the edited file into the EFI volume/partition. I rebooted the machine to see if the error was still there. Because the error message just flashes on the screen and doesn’t stop, I had to keep an eye out for it. And it did not appear! Success! That evens the score with the BIOS. 🙂 My next task will be to see if I can install Win 10 on that older NVMe drive while using OpenCore. I will have to do some research on it. Until next time.
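For anyone who would rather script the config.plist edit than use Xcode, here is a rough sketch using Python's standard `plistlib` module. It is an alternative to what I did by hand, not the method described above. The function name `remove_plist_key` is made up for illustration, and it should be pointed at a working copy of config.plist rather than the one on the EFI volume/partition. Note that `plistlib` rewrites the whole file, so any XML comments in the original will not survive.

```python
import plistlib
import shutil
from pathlib import Path


def remove_plist_key(config_path, section_path, key):
    """Delete `key` from the nested dict at `section_path` in a plist file.

    Returns True if the key was found and removed, False otherwise.
    A backup copy with a ".bak" suffix is written before the file is changed.
    """
    config_path = Path(config_path)
    with config_path.open("rb") as fh:
        config = plistlib.load(fh)

    # Walk down the section path, e.g. ["UEFI", "Input"].
    node = config
    for name in section_path:
        node = node.get(name)
        if not isinstance(node, dict):
            return False
    if key not in node:
        return False

    shutil.copy2(config_path, config_path.with_name(config_path.name + ".bak"))
    del node[key]
    with config_path.open("wb") as fh:
        # sort_keys=False keeps the existing key order in the rewritten file.
        plistlib.dump(config, fh, sort_keys=False)
    return True


# Hypothetical usage on a working copy of the file:
#   remove_plist_key("config.plist", ["UEFI", "Input"], "KeyMergeThreshold")
```

Running it a second time returns False and leaves the file alone, which is a quick way to confirm the key is really gone before copying the file back.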